Practical MLOps: Best Practices and an Interactive Example With UbiOps

Can diving into the world of AI deployment give us some interesting insights? In this blog, we unravel the complexities behind bringing AI models from conception to production. Join us as we go through the challenges faced by Data Scientists and Software Developers in transforming AI into tangible products.

Author: Sharadhi Alape Suryanarayana.

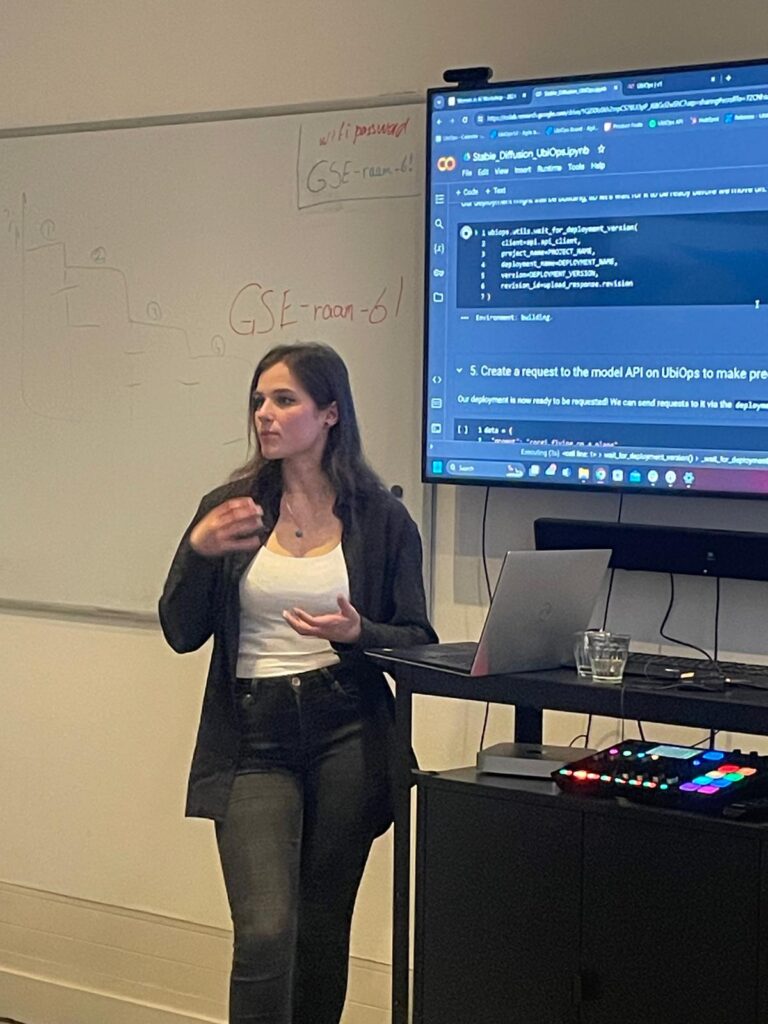

From navigating the intricate landscape of ML algorithms to overcoming deployment hurdles, we explored it all during our March circle event. Plus, we got an insider’s look at a captivating workshop led by Anouk Dutree, Head of Product at UbiOps, which demystified the deployment process with a hands-on demo. Sharadhi Alape Suryanarayana was there to report on this circle event and share her thoughts. Whether you’re new to the AI scene or a seasoned enthusiast, her blog promises to captivate and educate. Continue reading to discover the power of AI in shaping our digital future.

UI and ML Model: A seamless experience on both sides?

Everyone, including my grandma, is talking about AI today. Granted, my grandma might be a stretch, but you get the point. AI has permeated so many domains that you can no longer ignore it. AI models in general, and ML models in particular, have quietly made their way into UIs, so that behind the one ‘Search’ or ‘OK’ button we click, complex Machine Learning (ML) algorithms may be processing billions of data points. And within seconds we are served what we would most probably enjoy (you see what I did there!). But is this experience as seamless behind the screen as it is in front of it? What challenges do Data Scientists and Software Developers have to navigate to transform the ever-pervasive AI into an actual product? Armed with answers to these and many other questions, Anouk Dutree, Head of Product at UbiOps, took to the stage and gave all of us a demo of how ML models are deployed through UbiOps.

Confession time. This was my first Women in AI circle event. Also, I am fairly new to The Netherlands. My excitement, apprehension and a cocktail of other feelings were evident when I arrived at the Equals studio at 16:30 instead of 17:30, the scheduled start. But then visitors started trickling in, small talk was made, and we nearly emptied a bowl of potato chips before the event began. Everyone was at a different point in their ‘data journey’. From data analysts and data scientists to sales professionals, the crowd was a mixed but cheerful bunch. We even had inside jokes (Shh!). But every attendee would agree with me when I say Anouk kept us hooked with her slides, anecdotes, content and interactive presentation. And with that, it is time to introduce Anouk!

With a Bachelor’s in Nanobiology and Computer Science, Anouk found her calling in Computer Science, which led her to a Master’s in Computer Science. Today, as the Head of Product at UbiOps, Anouk plays a pivotal role in helping AI models get deployed to ‘Production’, where their real value can be unleashed. An interesting dimension to this very impressive CV is that Anouk co-hosts De Dataloog, a Dutch podcast on Big Data, Data Science, Machine Learning and data-driven transformation. With this creative hat firmly in place, it is no surprise that Anouk made complex topics accessible to a diverse audience; many of her quotes appear in this very blog. When I remarked to her that I am a blogger, she said ‘That’s a nice space to be in’, referring specifically to Data Science.

Navigating the landscape of ‘spaghetti infrastructure’: How does UbiOps help ML models see the light of day?

Developing an ML model today is easier than before. What once entailed a slew of steps, such as Feature Engineering, a Data Science workbench and Experiment Tracking, is today often simplified to pulling a pre-trained model and fine-tuning it, thanks to the advent of GenAI. Yet the simplified workflow and the growing interest in leveraging AI models in business for long-term sustainability have not translated into more AI models being deployed. By an often-quoted estimate, a staggering 80% of Data Science projects never make it into Production. This primarily stems from the fact that Data Scientists and Engineers come from two different worlds, and the more complex the model, the harder it is to deploy. Also, who is responsible when the model fails after deployment? A few other factors aggravate this issue: Data Volatility (can your model handle sudden data changes?), the need for faster iteration cycles (how quickly can you update the model if it fails?), model traceability (how was the model made?), scalability (how do you go from 5 GPUs to 100 GPUs?) and the range of infrastructure required to deploy even the simplest of models.

An interesting dimension to this problem comes from the Dutch DIY mindset. While building the infrastructure in-house provides control, it often slows down the building of valuable models, which impedes AI adoption in The Netherlands. Also, if you are not outsourcing MLOps, then on top of the overhead of development time, maintenance and personnel, who is going to be held responsible for a failure during the Christmas break? And this, folks, is where UbiOps comes in. It is Dutch, so yay for the DIYers! It takes care of the hassle of training, deploying and serving AI/ML models and other Python and R code. You can use UbiOps to create scalable inference API endpoints for your models, train AI models, build pipelines and workflows, and manage everything from one place. To put it in simpler terms for non-experts: you might have used DALL-E 3 or Midjourney to generate images from text prompts. In this workshop, we went behind the scenes of such text-to-image models. We did NOT program the entire neural network architecture from scratch, yet we managed to generate images from text using the steps described below.
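To make ‘scalable inference API endpoint’ a little more concrete, here is a minimal sketch of what calling such an endpoint over plain HTTP could look like. It only builds the request and does not send it. The host `https://api.ubiops.com/v2.1`, the `/projects/{project}/deployments/{deployment}/requests` path, the `Token <your-token>` authorization format and the `prompt` input field are assumptions modelled on UbiOps’ REST API style, so check the UbiOps API reference before relying on them; the project, deployment and token values are hypothetical.

```python
import json
import urllib.request

# Assumed public API host and version; verify against the UbiOps API reference.
API_HOST = "https://api.ubiops.com/v2.1"


def build_inference_request(project, deployment, token, data):
    """Build (but do not send) a POST request to a deployment's request endpoint."""
    url = f"{API_HOST}/projects/{project}/deployments/{deployment}/requests"
    return urllib.request.Request(
        url,
        data=json.dumps(data).encode("utf-8"),  # JSON body with the model inputs
        method="POST",
        headers={
            "Authorization": f"Token {token}",  # assumed token header format
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Hypothetical project, deployment and token values.
    req = build_inference_request(
        "my-project", "stable-diffusion", "<your-token>", {"prompt": "flying corgi"}
    )
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would actually send the request.
```

In practice you would more likely go through the UbiOps client library than hand-build HTTP requests, but the sketch shows that, from the outside, a deployed model is just an authenticated endpoint that takes JSON in.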

Unravelling the mysteries of AI deployment: A Journey with UbiOps

We were given a well-documented Jupyter notebook to get a taste of deploying an AI model on UbiOps. In this demo, we deployed the Stable Diffusion model from Hugging Face (via its diffusers library). Note that the same could also be done from the UbiOps web interface. First, we installed the UbiOps client library, created a project in the UbiOps UI and connected to it using an API token. With the connection established, the next step was to set up an environment with the dependencies needed to run the Stable Diffusion model. This is needed because UbiOps runs Python code as a microservice in the cloud, inside a secure container image that the platform creates for your code. To build this image, we defined an environment in UbiOps with all the dependencies required to run Stable Diffusion. These environments can also be reused for different models.
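At its core, the environment step boils down to a list of dependencies that the platform installs when it builds the container image. Below is a minimal sketch, assuming an environment package is a zip archive with a `requirements.txt` at its root. The package names are our guess at what Stable Diffusion needs, not the exact list from the workshop notebook, and `build_environment_package` is a hypothetical helper; uploading the resulting zip (through the UI or the client library) is not shown.

```python
import zipfile
from pathlib import Path

# Assumed (unpinned) dependencies for running Stable Diffusion; pin versions
# in practice so that every image build is reproducible.
REQUIREMENTS = ["diffusers", "transformers", "accelerate", "torch"]


def build_environment_package(out_dir, name="stable_diffusion_env"):
    """Hypothetical helper: write requirements.txt and zip it for upload."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    # The dependency list the platform would install when building the image.
    req_file = out / "requirements.txt"
    req_file.write_text("\n".join(REQUIREMENTS) + "\n")

    # Place requirements.txt at the root of the zip archive.
    zip_path = out / f"{name}.zip"
    with zipfile.ZipFile(zip_path, "w") as zf:
        zf.write(req_file, arcname="requirements.txt")
    return zip_path


if __name__ == "__main__":
    print(build_environment_package("build"))
```

Keeping the dependency list separate from the model code is what makes the environment reusable: another model with the same requirements can point at the same environment instead of rebuilding it.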

With the environment for our deployment created, the task was then to write the code that runs the Stable Diffusion model and to push it to UbiOps. This was achieved by defining a ‘Deployment’ class with two methods: __init__() and request(). The __init__() method runs when the deployment starts up and can be used to load models, data, artefacts and anything else required for inference. The request() method, on the other hand, runs every time a call is made to the model’s REST API endpoint and contains all the logic for processing data. This separation ensures fast response times, because the expensive loading happens once at start-up rather than on every request. Next, we created a UbiOps deployment, in which the model’s inputs and outputs were defined, and then a version of that deployment, in which the name, the environment, the instance type (CPU or GPU) and its size were defined. With these definitions in place, the code was packaged and uploaded. Once the deployment was ready, we could enter text prompts according to our whims and fancies. For demo purposes, Anouk used ‘flying corgi’, which was rather harmless, but she also asked the crowd for suggestions. When I said ‘tourists in Amsterdam’, Anouk remarked that if the image turned out controversial, it was the model’s fault.

Uniting for inclusive AI innovation

I once asked my brother (who was also doing his PhD at the time) what the point was of attending presentations when we don’t really understand what’s happening after a few slides. He replied that we attend presentations both to stay updated on the subject and to learn how to communicate effectively. This workshop served both purposes. In addition to giving us hands-on experience deploying a Stable Diffusion model, Anouk showed us how to get everyone hooked on a talk about machine learning. The slides, the Jupyter notebook and Anouk’s witty remarks were enjoyed by everyone in the crowd. The networking before and after the talk was refreshing and offered glimpses of how AI has become everyone’s topic of interest. In fact, the diverse clientele of UbiOps, ranging from DuckDuckGoose, which detects Deepfakes when bank accounts are opened, to the Supreme Court of the Netherlands, which uses LLMs to simplify legal documents, is a testament to the pervasiveness of AI.

Be it Anouk’s statement on controversial results or the detection of Deepfakes, every positive effect of AI can easily be overshadowed by its negative impacts. This is why we at Women in AI believe it is more necessary than ever to facilitate conversations and enable diverse voices to have a say in how AI is employed. To this end, we have a carefully curated list of events that we hope will attract more participants than ever. Do you want a sneak peek into what we do? Do you also want to be part of the lively crowd that learns, networks and questions? Check out the Circle Events page and reserve your spot for the next one!